Evangelos Kazakos1, Arsha Nagrani2, Andrew Zisserman2 and Dima Damen1

1University of Bristol, VIL, 2University of Oxford, VGG

Abstract

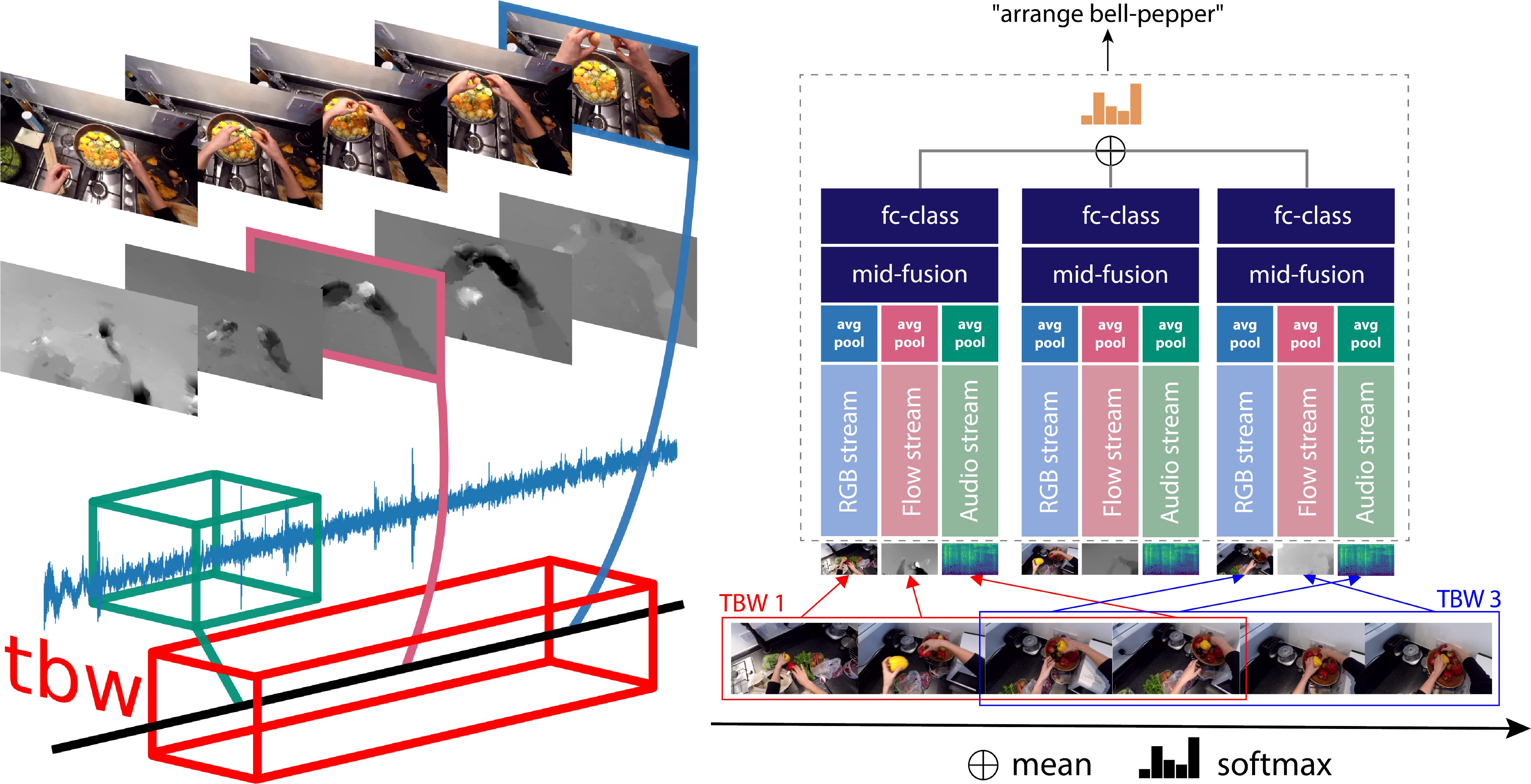

We focus on multi-modal fusion for egocentric action recognition, and propose a novel architecture for multi-modal temporal-binding, i.e. the combination of modalities within a range of temporal offsets. We train the architecture with three modalities – RGB, Flow and Audio – and combine them with mid-level fusion alongside sparse temporal sampling of fused representations. In contrast with previous works, modalities are fused before temporal aggregation, with shared modality and fusion weights over time. Our proposed architecture is trained end-to-end, outperforming individual modalities as well as late-fusion of modalities.

We demonstrate the importance of audio in egocentric vision, on per-class basis, for identifying actions as well as interacting objects. Our method achieves state of the art results on both the seen and unseen test sets of the largest egocentric dataset: EPIC-Kitchens, on all metrics using the public leaderboard.

Video

Invited talk @ Sight and Sound Workshop - CVPR2020

Downloads

Bibtex

@InProceedings{kazakos2019TBN,

author = {Kazakos, Evangelos and Nagrani, Arsha and Zisserman, Andrew and Damen, Dima},

title = {EPIC-Fusion: Audio-Visual Temporal Binding for Egocentric Action Recognition},

booktitle = {IEEE/CVF International Conference on Computer Vision (ICCV)},

year = {2019}

}

Acknowledgements

This research is supported by EPSRC LOCATE (EP/N033779/1), GLANCE (EP/N013964/1) & Seebibyte (EP/M013774/1). Evangelos is funded by EPSRC Doctoral Training Partnership, and Arsha by a Google PhD Fellowship.